Building a Semantic Search Engine, aka a RAG System, for a ChatBot LLM

Harness the Power of Retrieval-Augmented Generation (RAG) in ChatBot LLM

Welcome to the forefront of LLM technology, where we help you create advanced search systems with Retrieval-Augmented Generation (RAG) capabilities. Dive into the world of RAG, an approach that combines the best of search-based and generative AI models to deliver exceptional chatbot performance.

What is a RAG System in ChatBot LLM?

A RAG System in ChatBot LLM refers to the integration of retrieval-augmented generation in chatbot applications. This system leverages a two-pronged approach:

- Retrieval-Based Model: Quickly accesses a database of information to find relevant content based on user queries.

- Generative Model: Enhances the retrieved information by generating coherent and contextually enriched responses.

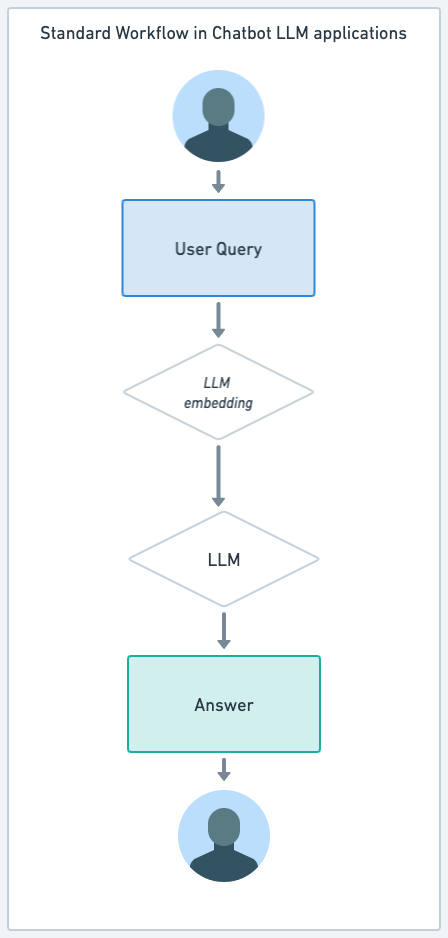

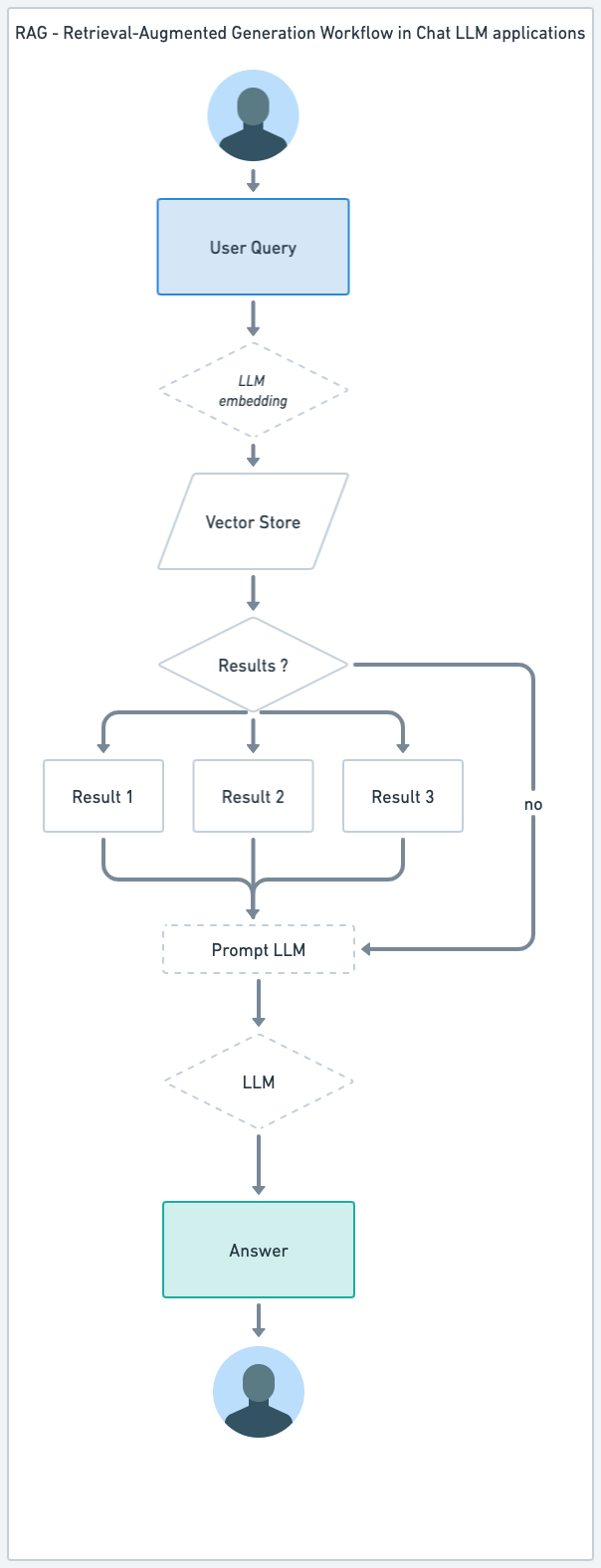

| Standard LLM ChatBot | RAG Workflow for LLM ChatBot |

|---|---|

|

|

Understanding the Difference: Standard LLM Chatbot vs. RAG System

In the realm of AI-driven chatbots, two prevalent models are the standard LLM chatbot and the Retrieval-Augmented Generation (RAG) system. While both utilize advanced AI technologies, their operational frameworks and capabilities differ significantly.

Standard LLM Chatbot (e.g., ChatGPT, GPT-4)

- Direct Interaction with LLM Model: Standard LLM chatbots, such as those based on ChatGPT or GPT-4, directly interact with the underlying Large Language Model. They generate responses based on the training and knowledge encapsulated within the LLM itself.

- Generative Responses: These chatbots excel in creating responses from scratch, relying on the vast amount of information and patterns learned during the model training process.

- Adaptability: They are highly adaptable, capable of handling a wide range of topics and queries, but their responses are limited to the knowledge integrated into the model up to its last training update.

RAG System (Retrieval-Augmented Generation)

- Combination of Retrieval and Generative Models: The RAG system is a hybrid approach that combines a retrieval-based model with a generative LLM like GPT-4. It first retrieves information from a specialized vector store database and then uses a generative model to augment and contextualize this information.

- Use of Vector Store Database: The RAG system employs a vector store for efficient retrieval of relevant data. This database houses high-dimensional vector representations of information, allowing for rapid and precise data retrieval based on query similarity.

- Enhanced Relevance and Timeliness: Because the vector store can be updated without retraining the model, RAG systems can draw on information more recent than the model's last training update, making them particularly useful in scenarios where up-to-date data is crucial.

- Dynamic and Contextual Responses: The generative component of the RAG system then enriches the retrieved data, ensuring that responses are not only accurate but also contextually nuanced and detailed.
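The difference between the two workflows can be sketched in a few lines. This is a minimal illustration, not a production design: `llm` and `retrieve` are hypothetical callables standing in for a real model client and a real vector store query, and the prompt wording is an assumption.

```python
from typing import Callable, List

# Hypothetical stand-ins: `LLM` is any callable that maps a prompt to text,
# and `Retriever` is any callable that maps a question to a list of passages.
LLM = Callable[[str], str]
Retriever = Callable[[str], List[str]]


def answer_standard(question: str, llm: LLM) -> str:
    """Standard chatbot: the model only sees the question."""
    return llm(question)


def answer_rag(question: str, retrieve: Retriever, llm: LLM) -> str:
    """RAG chatbot: retrieved passages are placed in the prompt first."""
    passages = retrieve(question)
    context = "\n".join(f"- {p}" for p in passages)
    prompt = (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )
    return llm(prompt)


def echo_llm(prompt: str) -> str:
    # Stub model for demonstration: returns the prompt it received.
    return prompt


def fake_retrieve(question: str) -> List[str]:
    # Stub retriever: a real system would query a vector store here.
    return ["Support hours are 9:00 to 17:00 on weekdays."]
```

With the stubs above, `answer_rag("When is support open?", fake_retrieve, echo_llm)` returns the full prompt, which makes it easy to inspect what the model would actually receive in the RAG case.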

Benefits for Different Roles:

For customers, a RAG system enhances their experience by providing more accurate, up-to-date, and contextually relevant information. This leads to higher satisfaction and trust in the provided services.

For employees, the RAG system serves as a powerful tool for accessing the latest organizational knowledge and data. It supports efficient decision-making and problem-solving, thereby boosting productivity and effectiveness in their roles.

In summary, while standard LLM chatbots offer a broad range of generative capabilities, RAG systems elevate this by combining the latest data retrieval techniques with the creative prowess of generative models. This hybrid approach makes RAG systems particularly advantageous in scenarios requiring both accuracy and conversational fluency.

How Do the Components of a RAG System Work in ChatBot LLM?

1. Retrieval-Based Model:

The retrieval component of a RAG system in ChatBot LLM provides the basis for delivering accurate and relevant information. Unlike the basic LLM ChatBot, which is connected directly to an LLM, a RAG system lets you connect to private data sources, or to data stores created specifically for the LLM chatbot or the LLM search engine.

This model works as follows:

- Rapid Data Access: Using embedding-based search, the retrieval model quickly scans extensive databases to locate information that matches user inquiries.

- Relevance Matching: It evaluates the relevance of the retrieved data, ensuring that the responses are directly connected to the user's specific query or need.

- Efficient Integration: Seamlessly integrates with the chatbot’s conversational interface, providing a smooth transition between query input and data retrieval.
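As a rough sketch of embedding search and relevance matching, the example below ranks documents by cosine similarity and drops anything under a threshold. The `embed` function is a toy bag-of-words stand-in (an assumption for illustration); a real system would call an embedding model instead. The names `retrieve`, `top_k`, and `min_score` are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Dict[str, float]:
    """Toy embedding: a bag-of-words count vector (assumption, not a real model)."""
    return dict(Counter(re.findall(r"[a-z0-9]+", text.lower())))


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(a[k] * b.get(k, 0.0) for k in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(
    query: str,
    documents: List[str],
    top_k: int = 2,
    min_score: float = 0.1,
) -> List[Tuple[float, str]]:
    """Return up to top_k (score, document) pairs scoring at least min_score."""
    q = embed(query)
    scored = [(cosine(q, embed(d)), d) for d in documents]
    relevant = [pair for pair in scored if pair[0] >= min_score]
    return sorted(relevant, key=lambda pair: pair[0], reverse=True)[:top_k]


# Illustrative documents, invented for this example.
DOCS = [
    "Refunds are processed within five business days.",
    "Our office is closed on public holidays.",
    "To reset your password, open the account settings page.",
]
```

Calling `retrieve("How do I reset my password?", DOCS)` returns only the password document, since the other two share no words with the query and fall below `min_score`. The threshold is what keeps unrelated passages out of the prompt when nothing in the database matches.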

2. Generative Model:

The generative aspect of the RAG system enhances the chatbot's capabilities by adding a layer of context understanding and response generation. Once the custom databases return their results, an LLM composes the response to be displayed to the user. It generates the requested output (see AI use cases) in reply to the user.

This model works as follows:

- Contextual Enrichment: Upon receiving the relevant data from the retrieval model, the generative model interprets and contextualizes it within the framework of the ongoing conversation.

- Personalized Responses: It generates responses that are not only accurate but also tailored to the user's conversational style, preferences, and history, offering a more personalized interaction.

- Dynamic Interaction: The model adapts its responses to suit the evolving context of the conversation, ensuring that each reply is coherent and contextually appropriate, enhancing the overall flow of the dialogue.
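One way to picture this step is as prompt assembly: the retrieved passages and the recent conversation are combined into the input the generative model sees. The sketch below assumes a list of role/content dictionaries, a shape many chat-style APIs accept; `build_messages`, `max_history`, and the system prompt wording are hypothetical choices for illustration.

```python
from typing import Dict, List


def build_messages(
    question: str,
    passages: List[str],
    history: List[Dict[str, str]],
    max_history: int = 4,
) -> List[Dict[str, str]]:
    """Combine retrieved passages, recent turns, and the new question."""
    # Number the passages so the model can refer to them individually.
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    system = (
        "You are a helpful assistant. Answer from the numbered context. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}"
    )
    # Keep only the most recent turns so the prompt stays bounded.
    recent = history[-max_history:] if max_history > 0 else []
    return [
        {"role": "system", "content": system},
        *recent,
        {"role": "user", "content": question},
    ]
```

Keeping the conversation history in the message list is what lets the model adapt to the evolving dialogue, while the numbered context ties each answer back to retrieved data.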

Together, the Retrieval-Based and Generative Models within a RAG System create a ChatBot LLM that is not only efficient in data retrieval but also adept in crafting responses that are contextually rich and personalized. This two-pronged approach revolutionizes the way chatbots understand and interact with users, setting a new benchmark in AI-driven communication.

In advanced AI applications, semantic RAG search can become one of the key components, like other generative AI use cases.

Why Implement a Semantic Search Engine Based on a RAG System in Your ChatBot LLM?

- Enhanced Accuracy and Relevance: Combines precise information retrieval with the creative flexibility of generative models for more accurate and relevant responses.

- Richer User Interactions: Provides detailed, nuanced, and informative replies, elevating the standard of user engagement.

- Versatile Applications: Ideal for scenarios requiring both factual accuracy and conversational fluency, such as customer support, information portals, and educational bots.

The implementation of a Retrieval-Augmented Generation (RAG) System in your ChatBot LLMs offers a multitude of benefits that significantly enhance the user experience for both customers and employees. Here’s how different roles can leverage the advantages of a RAG system:

Benefits for Customers

- Instant Access to Accurate Information: Customers can receive immediate, factual answers to their queries, improving their experience and satisfaction levels.

- Personalized Customer Service: The generative model’s ability to tailor responses makes interactions feel more personal and engaging, fostering customer loyalty.

- Efficient Problem Solving: With its ability to quickly access a wide range of information, the RAG system can effectively address and resolve customer issues or questions, reducing resolution time.

Benefits for Employees

- Streamlined Internal Communication: Employees can utilize the RAG system for quick access to internal knowledge bases, making information retrieval for internal processes more efficient.

- Enhanced Training and Support: The detailed and nuanced responses provided by the RAG system can be an invaluable tool for employee training and ongoing support.

- Improved Workflow Management: By handling routine queries and data retrieval, the RAG system allows employees to focus on more complex tasks, thus enhancing productivity and workflow efficiency.

Across the Board Advantages

- Enhanced Accuracy and Relevance: The RAG system's precise data retrieval coupled with generative creativity ensures that all interactions are both accurate and contextually relevant, regardless of whether the user is a customer or an employee.

- Richer User Interactions: Users enjoy detailed, nuanced, and informative responses, making their interactions with the chatbot more engaging and informative.

- Versatile Applications: The RAG system is adept in a variety of scenarios, from customer service to internal communications, making it a versatile tool for different aspects of a business.

Implementing a RAG system in your ChatBot LLM offers a transformative approach to handling customer and employee interactions. By combining the strengths of both retrieval and generative models, businesses can provide an enhanced experience that is both efficient and satisfying for all users.

The Process of Building a RAG System

- Data Collection and Indexing: Compile and structure a comprehensive database of information relevant to your chatbot’s domain.

- Integration of Retrieval Model: Implement a retrieval model that can efficiently query the database to find pertinent information.

- Augmentation with Generative Model: Enhance the retrieval model with a generative LLM that can expand, contextualize, and personalize the retrieved data.

- Testing and Optimization: Rigorously test and fine-tune the system for optimal performance and accuracy.
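Two of the steps above lend themselves to a short sketch: preparing documents for indexing and measuring retrieval quality during testing. The example below is a simplified illustration under stated assumptions: `chunk_words` splits on whitespace rather than model tokens, and `hit_rate` is a hypothetical metric that checks whether an expected phrase appears in any retrieved chunk.

```python
from typing import Callable, List, Tuple


def chunk_words(text: str, size: int = 50, overlap: int = 10) -> List[str]:
    """Split text into overlapping word windows ready for indexing."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        # Stop once a window has reached the end of the text.
        if start + size >= len(words):
            break
    return chunks


def hit_rate(
    cases: List[Tuple[str, str]],
    retrieve: Callable[[str], List[str]],
) -> float:
    """Fraction of (question, expected phrase) cases found in retrieved chunks."""
    if not cases:
        return 0.0
    hits = sum(
        any(expected.lower() in chunk.lower() for chunk in retrieve(question))
        for question, expected in cases
    )
    return hits / len(cases)
```

The overlap between chunks reduces the chance that a relevant sentence is cut in half at a chunk boundary. Tracking a metric such as `hit_rate` on a fixed set of test questions gives a repeatable way to compare chunk sizes or retrieval settings while tuning the system.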

Customization and Scalability

- Tailored to Your Needs: Our approach to building RAG systems is highly customizable, ensuring that the chatbot aligns with your specific requirements and goals.

- Scalable Solutions: Designed to grow with your needs, our RAG systems can handle increasing volumes of data and user queries.

Future of ChatBot LLM with RAG

- Continuous Evolution: As technology advances, expect more sophisticated RAG integrations that push the boundaries of what chatbots can achieve.

- Adaptive Learning: Future RAG systems will continually learn and adapt, becoming even more efficient and user-friendly.

Embark on a journey to redefine digital communication and user engagement with our advanced RAG System for ChatBot LLM. Contact us today to learn how we can tailor a RAG-enhanced ChatBot LLM to transform your business communication.